Maximizing Convolutional Neural Network (CNN) Accelerator Efficiency Through Resource Partitioning

A new CNN accelerator that achieves 3.8x higher throughput than state-of-the-art accelerators by using multiple specialized processors

Convolutional Neural Networks (CNNs) are transforming machine learning, with applications in recommendation systems, natural language processing, and computer vision. However, their computational demands are high enough that dedicated hardware accelerators are needed. CNNs improve every year, but as they grow more capable, their computational cost rises and it becomes harder to find computing platforms that can keep up. Multi-core CPUs no longer offer enough performance, and GPUs consume a great deal of power, so field-programmable gate arrays (FPGAs) have become an attractive platform for CNN accelerators. Current FPGA-based CNN accelerators use a single processor that computes the CNN's convolutional layers one at a time, with the processor's dimensions chosen to maximize throughput across the whole collection of layers. This approach leads to significant inefficiency, because one processor must compute many layers whose dimensions differ radically, and part of its arithmetic hardware sits idle whenever a layer's shape does not match the processor's. What is needed is an accelerator design that makes efficient use of the hardware resources it costs.
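To make this inefficiency concrete, the sketch below models a single processor as a Tn × Tm grid of multiply-accumulate (MAC) units that consumes Tn input feature maps and produces Tm output feature maps per step, so a layer whose map counts are not multiples of Tn and Tm leaves units idle. This cost model, the loosely AlexNet-like layer shapes, and the names `LAYERS`, `cycles`, and `utilization` are assumptions for illustration, not details taken from this technology's design.

```python
import math

# Hypothetical layer shapes (loosely AlexNet-like; illustrative only):
# (name, N input maps, M output maps, R output rows, C output cols, K kernel size)
LAYERS = [
    ("conv1", 3, 48, 55, 55, 11),
    ("conv2", 48, 128, 27, 27, 5),
    ("conv3", 256, 192, 13, 13, 3),
]


def cycles(layer, tn, tm):
    """Cycles for an assumed Tn x Tm MAC array to compute one layer.

    The array handles Tn input maps and Tm output maps per step, so partial
    tiles (N or M not a multiple of Tn or Tm) still cost a full step.
    """
    _, n, m, r, c, k = layer
    return r * c * k * k * math.ceil(n / tn) * math.ceil(m / tm)


def utilization(layer, tn, tm):
    """Fraction of MAC-unit cycles that perform useful multiply-accumulates."""
    _, n, m, r, c, k = layer
    useful_macs = n * m * r * c * k * k
    return useful_macs / (cycles(layer, tn, tm) * tn * tm)


if __name__ == "__main__":
    # One 8 x 64 processor (512 MAC units) shared by every layer.
    for layer in LAYERS:
        print(f"{layer[0]}: {utilization(layer, 8, 64):.2f}")
```

Under this model, an 8 × 64 array keeps every unit busy on conv2 and conv3 but only about 28% busy on conv1, whose three input maps fill fewer than half of the eight input lanes and whose 48 output maps fill 48 of the 64 output lanes.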

This technology is a CNN accelerator that uses FPGA resources to significantly increase computational efficiency. The new accelerator paradigm and its automated design methodology partition the available FPGA resources into multiple convolutional layer processors (CLPs), each tailored to a different subset of the CNN's convolutional layers. Spending the same FPGA resources on several smaller, specialized processors instead of a single large one increases computational efficiency and leads to higher overall throughput. Each processor operates on multiple images at the same time. An optimization algorithm computes how to partition the FPGA resources among the CLPs to produce a highly efficient design. The algorithm runs in minutes and outputs a set of CLP dimensions, which are then combined into a complete CNN accelerator.
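The partitioning idea can be illustrated with a toy exhaustive search under an assumed Tn × Tm cost model: split the layers between two CLPs, split a fixed budget of MAC units between them, and assume the CLPs work concurrently on different images so that throughput is set by the slower CLP. The unit budget, the cap `MAX_TN`, the layer shapes, and the functions `best_clp` and `best_two_clps` are hypothetical; this brute-force search is a stand-in for illustration and is not the optimization algorithm described here.

```python
import math

# Hypothetical layer shapes: (name, N in maps, M out maps, R, C, K); illustrative only.
LAYERS = [
    ("conv1", 3, 48, 55, 55, 11),
    ("conv2", 48, 128, 27, 27, 5),
    ("conv3", 256, 192, 13, 13, 3),
]
BUDGET = 512  # hypothetical total MAC units (e.g. DSP slices) on the FPGA
MAX_TN = 64   # hypothetical cap on Tn to keep this toy search small


def cycles(layer, tn, tm):
    """Cycles for an assumed Tn x Tm MAC array to compute one layer."""
    _, n, m, r, c, k = layer
    return r * c * k * k * math.ceil(n / tn) * math.ceil(m / tm)


def best_clp(layers, budget):
    """Best (total cycles, (Tn, Tm)) for one CLP running `layers` in sequence."""
    # For a fixed Tn, the widest Tm that fits the budget is never slower.
    return min(
        (sum(cycles(layer, tn, budget // tn) for layer in layers), (tn, budget // tn))
        for tn in range(1, min(budget, MAX_TN) + 1)
    )


def best_two_clps(layers, budget):
    """Exhaustively split layers and MAC units between two CLPs.

    Assumes the two CLPs work concurrently on different images, so one image
    completes every max(cycles_a, cycles_b) cycles.
    """
    best = None
    for mask in range(1, 2 ** len(layers) - 1):  # every nonempty proper subset
        group_a = [layer for i, layer in enumerate(layers) if mask >> i & 1]
        group_b = [layer for i, layer in enumerate(layers) if not mask >> i & 1]
        for units_a in range(1, budget):  # leave at least one unit for CLP B
            cyc_a, shape_a = best_clp(group_a, units_a)
            cyc_b, shape_b = best_clp(group_b, budget - units_a)
            epoch = max(cyc_a, cyc_b)
            if best is None or epoch < best[0]:
                best = (
                    epoch,
                    ([layer[0] for layer in group_a], shape_a),
                    ([layer[0] for layer in group_b], shape_b),
                )
    return best


if __name__ == "__main__":
    single, shape = best_clp(LAYERS, BUDGET)
    multi, clp_a, clp_b = best_two_clps(LAYERS, BUDGET)
    print(f"single CLP {shape}: {single} cycles/image")
    print(f"two CLPs {clp_a} {clp_b}: {multi} cycles/image")
    print(f"speedup: {single / multi:.2f}x")
```

With these made-up numbers, the best single CLP is 8 × 64 at 730,741 cycles per image, and giving conv1 its own small CLP stops its three input maps from idling most of a large array, so the two-CLP design completes an image in fewer cycles. Brute force grows quickly with more layers and more CLPs, so a practical design tool would need a smarter search.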

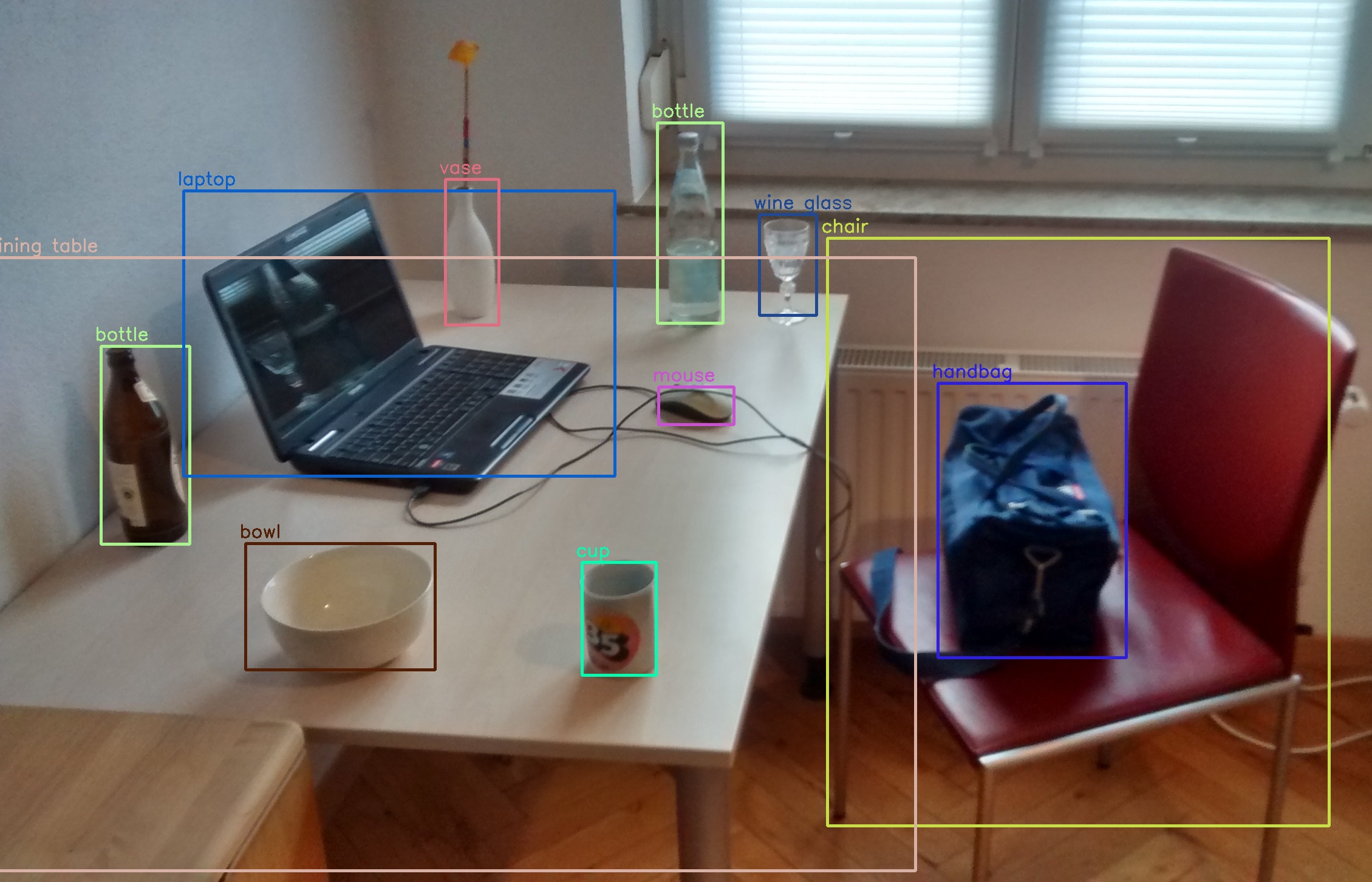

Source: MTheiler, https://commons.wikimedia.org/wiki/File:Detected-with-YOLO--Schreibtisch-mit-Objekten.jpg, CC BY-SA 4.0.

- Achieves 3.8x higher throughput than state-of-the-art CNN accelerators
- More cost-effective than conventional accelerators
- Experiments have shown that this method can achieve 90-100% arithmetic unit utilization, minimizing wasted hardware

This technology is applied to CNNs to increase their performance and efficiency. A primary application of CNNs is identifying objects in images.

Provisional patent

62/854,765

Available for Licensing

Development partner, Commercial partner, Licensing
